We share the latest Microsoft DP-200 exam dumps throughout the year, along with an online practice test, to help you prepare for Exam DP-200: Implementing an Azure Data Solution.

The exam content at https://www.leads4pass.com/dp-200.html is updated throughout the year.

DP-200 PDF downloads:

[PDF] Free Microsoft DP-200 PDF dumps from Google Drive: https://drive.google.com/open?id=1H70200WCZAc8N43RdlP4JVsNXdOm0D2U

[PDF] Free full Microsoft PDF dumps from Google Drive: https://drive.google.com/open?id=1AwBFPqkvdpJBfxdZ3nGjtkHQZYdBsRVz

Exam DP-200: Implementing an Azure Data Solution (beta) – Microsoft: https://www.microsoft.com/en-us/learning/exam-dp-200.aspx

Exam DP-200: Implementing an Azure Data Solution

Candidates for this exam are Azure data engineers who are responsible for data-related tasks that include ingesting, egressing, and transforming data from multiple sources using various services and tools. The Azure data engineer collaborates with business stakeholders to identify and meet data requirements while designing and implementing the management, monitoring, security, and privacy of data using the full stack of Azure services to satisfy business needs.

Implement data storage solutions (25-30%)

Implement Azure cloud data warehouses

Implement No-SQL Databases

Implement Azure SQL Database

Implement hybrid data scenarios

Manage Azure DevOps Pipelines

Manage and develop data processing (30-35%)

Implement big data environments

Develop batch processing solutions

Develop streaming solutions

Develop integration solutions

Implement data migration

Automate Data Factory Pipelines

Manage data security (15-20%)

Manage source data access security

Configure authentication and authorization

Manage and enforce data policies and standards

Set up notifications

Monitor data solutions (10-15%)

Monitor data storage

Monitor databases for a specified scenario

Monitor data processing

Manage and troubleshoot Azure data solutions (10-15%)

Manage Optimization

Manage business continuity

Free Microsoft DP-200 exam questions and answers

QUESTION 1

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop data engineering solutions for a company.

A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

Scale to minimize costs

Be monitored for cluster performance

You need to recommend a tool that will monitor clusters and provide information to suggest how to scale.

Solution: Monitor cluster load using the Ambari Web UI.

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

Ambari Web UI does not provide information to suggest how to scale.

Instead, monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.

References:

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-manage-ambari

QUESTION 2

You need to ensure that phone-based polling data can be analyzed in the PollingData database.

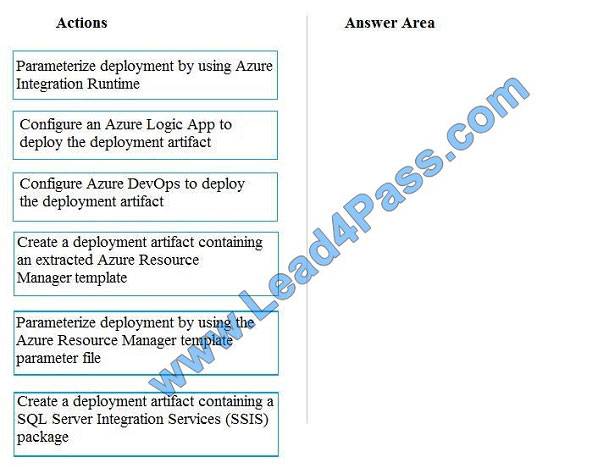

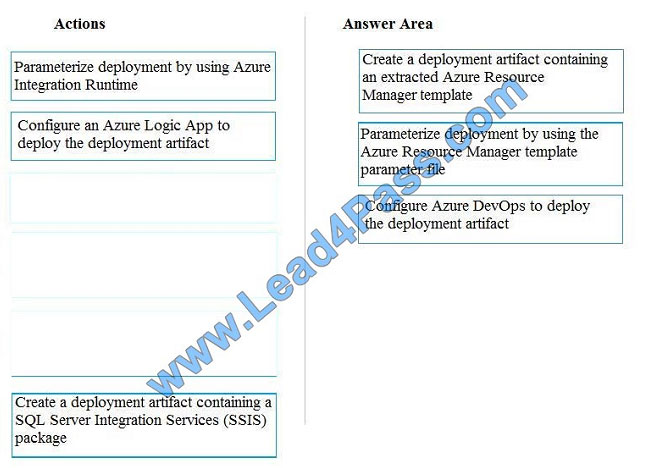

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

All deployments must be performed by using Azure DevOps. Deployments must use templates used in multiple environments.

No credentials or secrets should be used during deployments.

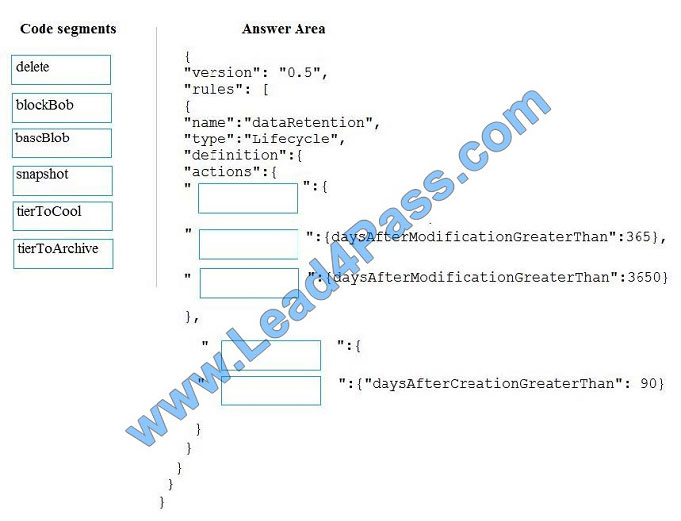

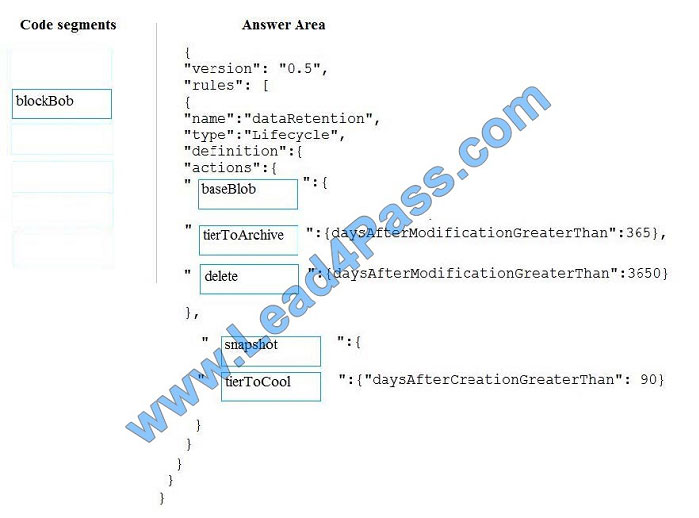

QUESTION 3

You manage a financial computation data analysis process. Microsoft Azure virtual machines (VMs) run the process in daily jobs, and store the results in virtual hard drives (VHDs).

The VMs produce results using data from the previous day and store the results in a snapshot of the VHD. When a new month begins, a process creates a new VHD.

You must implement the following data retention requirements:

Daily results must be kept for 90 days

Data for the current year must be available for weekly reports

Data from the previous 10 years must be stored for auditing purposes

Data required for an audit must be produced within 10 days of a request

You need to enforce the data retention requirements while minimizing cost.

How should you configure the lifecycle policy? To answer, drag the appropriate JSON segments to the correct locations. Each JSON segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Correct Answer:

The Set-AzStorageAccountManagementPolicy cmdlet creates or modifies the management policy of an Azure Storage account.

Example: Create or update the management policy of a Storage account with ManagementPolicy rule objects.

PS C:\>$action1 = Add-AzStorageAccountManagementPolicyAction -BaseBlobAction Delete -daysAfterModificationGreaterThan 100

PS C:\>$action1 = Add-AzStorageAccountManagementPolicyAction -InputObject $action1 -BaseBlobAction TierToArchive -daysAfterModificationGreaterThan 50

PS C:\>$action1 = Add-AzStorageAccountManagementPolicyAction -InputObject $action1 -BaseBlobAction TierToCool -daysAfterModificationGreaterThan 30

PS C:\>$action1 = Add-AzStorageAccountManagementPolicyAction -InputObject $action1 -SnapshotAction Delete -daysAfterCreationGreaterThan 100

PS C:\>$filter1 = New-AzStorageAccountManagementPolicyFilter -PrefixMatch ab,cd

PS C:\>$rule1 = New-AzStorageAccountManagementPolicyRule -Name Test -Action $action1 -Filter $filter1

PS C:\>$action2 = Add-AzStorageAccountManagementPolicyAction -BaseBlobAction Delete -daysAfterModificationGreaterThan 100

PS C:\>$filter2 = New-AzStorageAccountManagementPolicyFilter

References: https://docs.microsoft.com/en-us/powershell/module/az.storage/set-azstorageaccountmanagementpolicy
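
Since the question asks for JSON segments, it may help to see what the rule named Test from the PowerShell example looks like as a lifecycle policy document. The following is a minimal Python sketch, not the exam's answer: it assumes the documented rules / definition / filters / actions layout of a lifecycle management policy, reuses the day counts and prefixes from the example, and adds `blockBlob` as the blob type, which the example does not state. The function name `build_lifecycle_policy` is hypothetical.

```python
import json


def build_lifecycle_policy():
    """Build a policy document mirroring rule "Test" from the PowerShell example."""
    rule = {
        "enabled": True,
        "name": "Test",
        "type": "Lifecycle",
        "definition": {
            "filters": {
                # Assumption: block blobs; the example does not name a blob type.
                "blobTypes": ["blockBlob"],
                "prefixMatch": ["ab", "cd"],
            },
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": 30},
                    "tierToArchive": {"daysAfterModificationGreaterThan": 50},
                    "delete": {"daysAfterModificationGreaterThan": 100},
                },
                "snapshot": {
                    "delete": {"daysAfterCreationGreaterThan": 100},
                },
            },
        },
    }
    return {"rules": [rule]}


policy = build_lifecycle_policy()
print(json.dumps(policy, indent=2))
```

For the retention requirements in this question, the day thresholds would need to change; the values here only mirror the cmdlet example.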

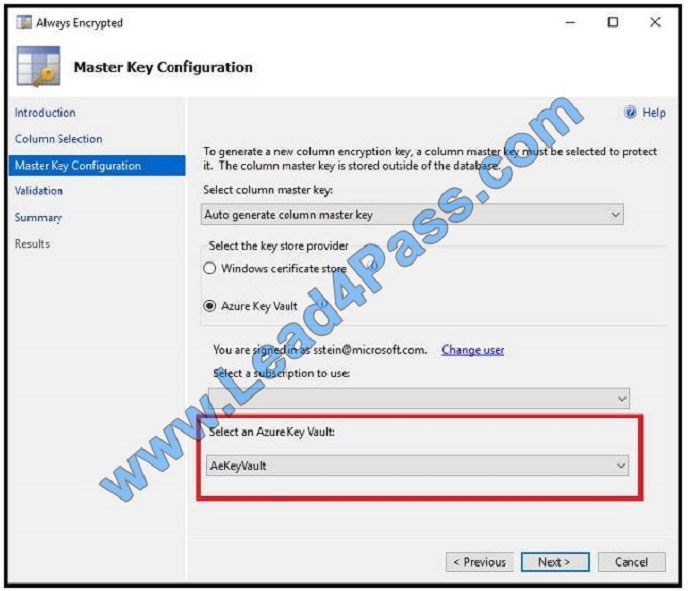

QUESTION 4

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You need to configure data encryption for external applications.

Solution:

1. Access the Always Encrypted Wizard in SQL Server Management Studio

2. Select the column to be encrypted

3. Set the encryption type to Deterministic

4. Configure the master key to use the Windows Certificate Store

5. Validate configuration results and deploy the solution

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

Use the Azure Key Vault, not the Windows Certificate Store, to store the master key.

Note: The Master Key Configuration page is where you set up your CMK (Column Master Key) and select the key store provider where the CMK will be stored. Currently, you can store a CMK in the Windows certificate store, Azure Key Vault, or a hardware security module (HSM).

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-always-encrypted-azure-key-vault

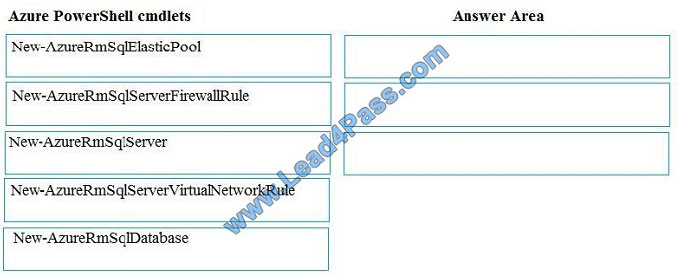

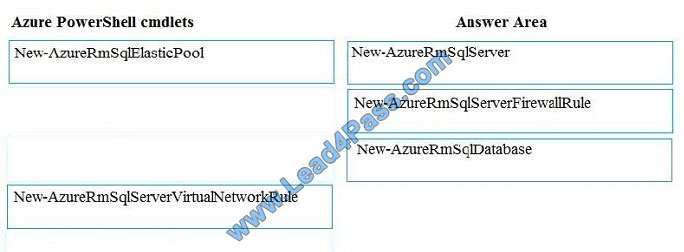

QUESTION 5

You plan to create a new single database instance of Microsoft Azure SQL Database.

The database must only allow communication from the data engineer's workstation. You must connect directly to the instance by using Microsoft SQL Server Management Studio.

You need to create and configure the database. Which three Azure PowerShell cmdlets should you use to develop the solution? To answer, move the appropriate cmdlets from the list of cmdlets to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: New-AzureSqlServer

Create a server.

Step 2: New-AzureRmSqlServerFirewallRule

New-AzureRmSqlServerFirewallRule creates a firewall rule for a SQL Database server.

Can be used to create a server firewall rule that allows access from the specified IP range.

Step 3: New-AzureRmSqlDatabase

Example: Create a database on a specified server

PS C:\>New-AzureRmSqlDatabase -ResourceGroupName "ResourceGroup01" -ServerName "Server01" -DatabaseName "Database01"

References: https://docs.microsoft.com/en-us/azure/sql-database/scripts/sql-database-create-and-configure-database-powershell?toc=%2fpowershell%2fmodule%2ftoc.json

QUESTION 6

A company plans to use Azure Storage for file storage purposes. Compliance rules require:

A single storage account to store all operations including reads, writes and deletes

Retention of an on-premises copy of historical operations

You need to configure the storage account.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Configure the storage account to log read, write and delete operations for service type Blob

B. Use the AzCopy tool to download log data from $logs/blob

C. Configure the storage account to log read, write and delete operations for service type table

D. Use the storage client to download log data from $logs/table

E. Configure the storage account to log read, write and delete operations for service type queue

Correct Answer: AB

Storage Logging logs request data in a set of blobs in a blob container named $logs in your storage account. This container does not show up if you list all the blob containers in your account but you can see its contents if you access it directly.

To view and analyze your log data, you should download the blobs that contain the log data you are interested in to a local machine. Many storage-browsing tools enable you to download blobs from your storage account; you can also use the command-line Azure Copy Tool (AzCopy), provided by the Azure Storage team, to download your log data.
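
To download only the logs you need, it helps to know how blobs are named inside $logs. As a rough Python sketch, assuming the Storage Analytics naming layout `<service-name>/YYYY/MM/DD/hhmm/<counter>.log` with times in UTC and the minutes always `00`, the prefix for one service and one hour can be built like this. The function name `log_prefix` is hypothetical and is not part of any Azure SDK.

```python
from datetime import datetime, timezone


def log_prefix(service: str, hour: datetime) -> str:
    """Return the blob-name prefix under $logs for one service and one UTC hour."""
    if service not in ("blob", "table", "queue"):
        raise ValueError("service must be 'blob', 'table' or 'queue'")
    if hour.tzinfo is None:
        raise ValueError("hour must be timezone-aware")
    # Log blobs are grouped by UTC hour; the minutes component is always 00.
    hour_utc = hour.astimezone(timezone.utc)
    return f"{service}/{hour_utc:%Y/%m/%d/%H}00/"


example = log_prefix("blob", datetime(2019, 5, 1, 13, 45, tzinfo=timezone.utc))
print(example)
```

A prefix like this can then be handed to whichever download tool you use to narrow the transfer to a single hour of blob-service logs.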

References: https://docs.microsoft.com/en-us/rest/api/storageservices/enabling-storage-logging-and-accessing-log-data

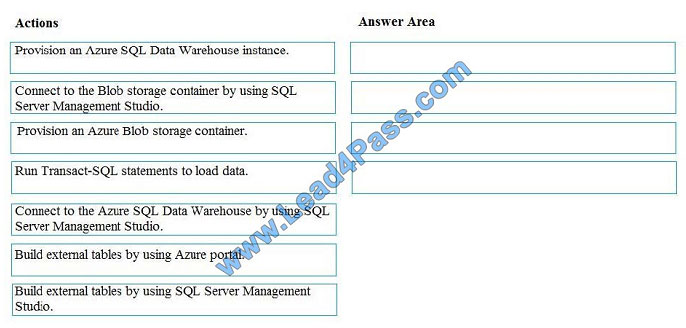

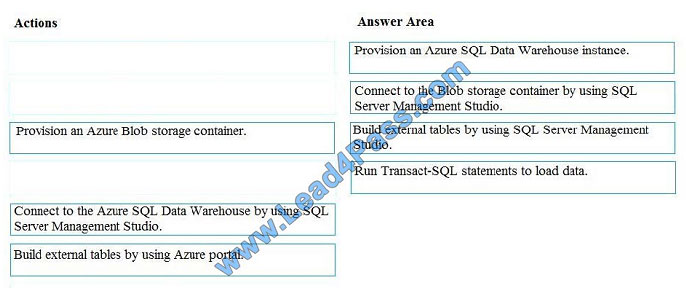

QUESTION 7

You develop data engineering solutions for a company. You must migrate data from Microsoft Azure Blob storage to an Azure SQL Data Warehouse for further transformation. You need to implement the solution. Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

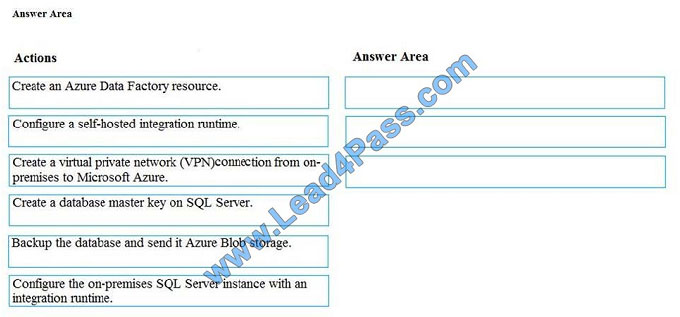

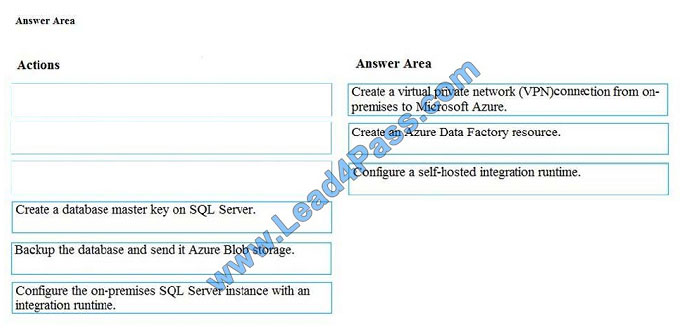

QUESTION 8

Your company manages on-premises Microsoft SQL Server pipelines by using a custom solution.

The data engineering team must implement a process to pull data from SQL Server and migrate it to Azure Blob storage. The process must orchestrate and manage the data lifecycle.

You need to configure Azure Data Factory to connect to the on-premises SQL Server database.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: Create a virtual private network (VPN) connection from on-premises to Microsoft Azure.

You can also use IPSec VPN or Azure ExpressRoute to further secure the communication channel between your on-premises network and Azure.

Azure Virtual Network is a logical representation of your network in the cloud. You can connect an on-premises network to your virtual network by setting up IPSec VPN (site-to-site) or ExpressRoute (private peering).

Step 2: Create an Azure Data Factory resource.

Step 3: Configure a self-hosted integration runtime.

You create a self-hosted integration runtime and associate it with an on-premises machine with the SQL Server database. The self-hosted integration runtime is the component that copies data from the SQL Server database on your machine to Azure Blob storage.

Note: A self-hosted integration runtime can run copy activities between a cloud data store and a data store in a private network, and it can dispatch transform activities against compute resources in an on-premises network or an Azure virtual network. A self-hosted integration runtime must be installed on an on-premises machine or a virtual machine (VM) inside a private network.

References:

https://docs.microsoft.com/en-us/azure/data-factory/tutorial-hybrid-copy-powershell

QUESTION 9

You plan to use Microsoft Azure SQL Database instances with strict user access control. A user object must:

Move with the database if it is run elsewhere

Be able to create additional users

You need to create the user object with correct permissions.

Which two Transact-SQL commands should you run? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. ALTER LOGIN Mary WITH PASSWORD = 'strong_password';

B. CREATE LOGIN Mary WITH PASSWORD = 'strong_password';

C. ALTER ROLE db_owner ADD MEMBER Mary;

D. CREATE USER Mary WITH PASSWORD = 'strong_password';

E. GRANT ALTER ANY USER TO Mary;

Correct Answer: CD

C: ALTER ROLE adds or removes members to or from a database role, or changes the name of a user-defined database role.

Members of the db_owner fixed database role can perform all configuration and maintenance activities on the database, and can also drop the database in SQL Server.

D: CREATE USER adds a user to the current database.

Note: Logins are created at the server level, while users are created at the database level. In other words, a login allows you to connect to the SQL Server service (also called an instance), and permissions inside the database are granted to the database users, not the logins. The logins will be assigned to server roles (for example, serveradmin) and the database users will be assigned to roles within that database (e.g. db_datareader, db_backupoperator).

References: https://docs.microsoft.com/en-us/sql/t-sql/statements/alter-role-transact-sql

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-user-transact-sql

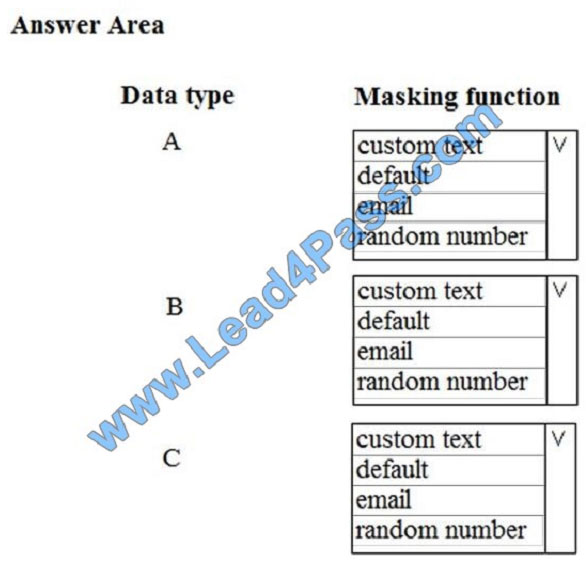

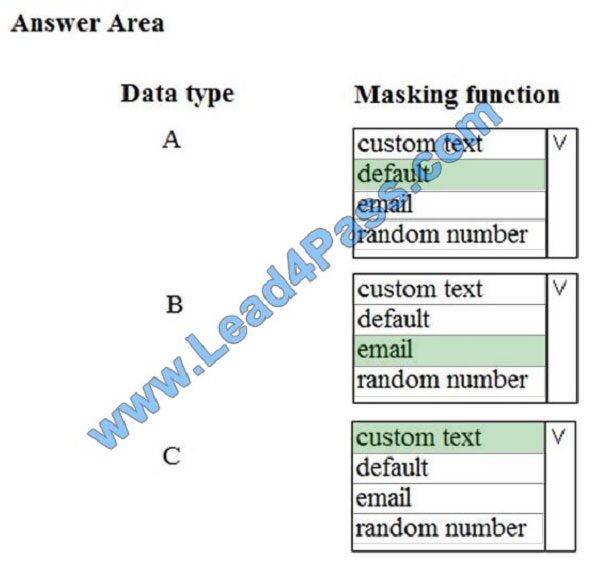

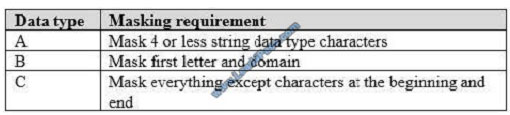

QUESTION 10

You need to mask tier 1 data. Which functions should you use? To answer, select the appropriate option in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

A: Default

Full masking according to the data types of the designated fields.

For string data types, use XXXX or fewer Xs if the size of the field is less than 4 characters (char, nchar, varchar, nvarchar, text, ntext).

B: email

C: Custom text

Custom StringMasking method which exposes the first and last letters and adds a custom padding string in the middle.

prefix,[padding],suffix

Tier 1 Database must implement data masking using the following masking logic:

References: https://docs.microsoft.com/en-us/sql/relational-databases/security/dynamic-data-masking
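
To make the three masking functions more concrete, here is a small Python sketch that imitates what each one returns for string data. It is only an illustration of the documented output shapes, not how SQL Server implements dynamic data masking, and it does not model edge cases such as values too short for the partial mask. The function names `mask_default`, `mask_email` and `mask_partial` are hypothetical.

```python
def mask_default(field_size: int) -> str:
    """Default mask for strings: XXXX, or fewer Xs if the field is shorter than 4."""
    return "X" * min(4, field_size)


def mask_email(value: str) -> str:
    """Email mask: expose the first letter and a constant .com suffix."""
    return value[:1] + "XXX@XXXX.com"


def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Custom text mask: keep `prefix` leading and `suffix` trailing characters."""
    head = value[:prefix]
    # max() guards against a suffix longer than the value itself.
    tail = value[max(0, len(value) - suffix):] if suffix > 0 else ""
    return head + padding + tail


print(mask_default(10))
print(mask_email("alice@example.org"))
print(mask_partial("555-123-4567", 0, "XXX-XXX-", 4))
```

The arguments to `mask_partial` follow the same prefix,[padding],suffix order as the custom text function described above.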


QUESTION 11

A company is designing a hybrid solution to synchronize data from an on-premises Microsoft SQL Server database to Azure SQL Database.

You must perform an assessment of databases to determine whether data will move without compatibility issues. You need to perform the assessment.

Which tool should you use?

A. SQL Server Migration Assistant (SSMA)

B. Microsoft Assessment and Planning Toolkit

C. SQL Vulnerability Assessment (VA)

D. Azure SQL Data Sync

E. Data Migration Assistant (DMA)

Correct Answer: E

The Data Migration Assistant (DMA) helps you upgrade to a modern data platform by detecting compatibility issues that can impact database functionality in your new version of SQL Server or Azure SQL Database. DMA recommends performance and reliability improvements for your target environment and allows you to move your schema, data, and uncontained objects from your source server to your target server.

References: https://docs.microsoft.com/en-us/sql/dma/dma-overview

QUESTION 12

You are the data engineer for your company. An application uses a NoSQL database to store data. The database uses the key-value and wide-column NoSQL database type.

Developers need to access data in the database using an API.

You need to determine which API to use for the database model and type.

Which two APIs should you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Table API

B. MongoDB API

C. Gremlin API

D. SQL API

E. Cassandra API

Correct Answer: BE

B: Azure Cosmos DB is the globally distributed, multimodel database service from Microsoft for mission-critical applications. It is a multimodel database and supports document, key-value, graph, and columnar data models.

E: Wide-column stores store data together as columns instead of rows and are optimized for queries over large datasets. The most popular are Cassandra and HBase.

References: https://docs.microsoft.com/en-us/azure/cosmos-db/graph-introduction

https://www.mongodb.com/scale/types-of-nosql-databases

QUESTION 13

You need to process and query ingested Tier 9 data.

Which two options should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Azure Notification Hub

B. Transact-SQL statements

C. Azure Cache for Redis

D. Apache Kafka statements

E. Azure Event Grid

F. Azure Stream Analytics

Correct Answer: EF

Explanation:

Event Hubs provides a Kafka endpoint that can be used by your existing Kafka-based applications as an alternative to running your own Kafka cluster.

You can stream data into Kafka-enabled Event Hubs and process it with Azure Stream Analytics, in the following steps:

Create a Kafka-enabled Event Hubs namespace.

Create a Kafka client that sends messages to the event hub.

Create a Stream Analytics job that copies data from the event hub into Azure Blob storage.

Scenario: Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company’s main office.

References: https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-kafka-stream-analytics

We have shared 13 of the latest Microsoft DP-200 exam questions and a free DP-200 PDF download. For updates, follow the “Allaboutexams.com” blog. The full DP-200 question set is available at https://www.leads4pass.com/dp-200.html (total questions: 58 Q&A).
